Question: If I had a container full of air and I suddenly decreased its volume, forcing the air into a smaller volume, would this be considered compression? Would it result in an increase in temperature, and why?

Answer on Stack Exchange by Luboš Motl: Yes, it is compression and yes, it will heat up the gas.

If there’s no heat exchange between the gas and the container (or the environment), we call it an adiabatic process. For an adiabatic process involving an ideal gas (which is a very good approximation for most common gases), $pV^\gamma$ is constant, where $\gamma > 1$ is an exponent such as $5/3$ (the value for a monatomic gas; for air it is about $7/5$). Because the temperature is $T = pV/nR$, and $pV = (pV^\gamma)\,V^{1-\gamma}$ is a constant times $V^{1-\gamma}$, which is a decreasing function of $V$, the temperature will increase when the volume decreases.
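The adiabatic argument above can be checked numerically. This is a minimal sketch, assuming an ideal monatomic gas ($\gamma = 5/3$) and hypothetical starting conditions (1 mol at roughly 1 atm in 24.5 litres); the function name `adiabatic_temperature` is illustrative only. It applies $pV^\gamma = \text{const}$ to find the new pressure and then $T = pV/nR$ to find the new temperature.

```python
R = 8.314  # molar gas constant, J/(mol K)

def adiabatic_temperature(n, p1, v1, v2, gamma):
    """Temperature after an adiabatic volume change v1 -> v2 (hypothetical helper).

    Uses p V**gamma = const for the new pressure, then T = p V / (n R).
    """
    p2 = p1 * (v1 / v2) ** gamma  # pV^gamma is conserved along the adiabat
    return p2 * v2 / (n * R)      # ideal gas law

# Assumed example: 1 mol of monatomic gas at ~1 atm occupying 24.5 litres
n, p1, v1 = 1.0, 101325.0, 0.0245
T1 = p1 * v1 / (n * R)
T_half = adiabatic_temperature(n, p1, v1, v1 / 2, 5 / 3)
print(f"start: {T1:.1f} K, after halving the volume: {T_half:.1f} K")
```

Halving the volume multiplies the temperature by $2^{\gamma - 1} = 2^{2/3}$, so the gas heats up as the answer says.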

Macroscopically, the heating is inevitable because one needs to perform work $p\,|dV|$ to do the compression, energy has to be conserved, and the only place it can go is the internal energy of the gas, given by a formula similar to $\frac{3}{2}nRT$.
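The energy bookkeeping can be sketched by integrating the work done on the gas along the adiabat and comparing it with the rise in internal energy. This assumes a monatomic ideal gas, for which the internal energy is exactly $\frac{3}{2}nRT$, and uses hypothetical starting conditions; `work_done_on_gas` is an illustrative name, and the integral is evaluated with a simple midpoint rule.

```python
R = 8.314  # molar gas constant, J/(mol K)

def work_done_on_gas(p1, v1, v2, gamma, steps=100_000):
    """Work done ON the gas, W = -integral of p dV, along p V**gamma = const.

    Midpoint-rule integration; for compression (v2 < v1) W comes out positive.
    """
    const = p1 * v1 ** gamma
    dv = (v2 - v1) / steps
    total = 0.0
    for i in range(steps):
        v_mid = v1 + (i + 0.5) * dv
        total += (const / v_mid ** gamma) * dv
    return -total

# Assumed example: 1 mol of monatomic gas compressed to half its volume
n, p1, v1, v2 = 1.0, 101325.0, 0.0245, 0.01225
gamma = 5 / 3                       # monatomic ideal gas: U = (3/2) n R T
T1 = p1 * v1 / (n * R)
T2 = T1 * (v1 / v2) ** (gamma - 1)  # T V^(gamma-1) = const follows from pV^gamma = const
W = work_done_on_gas(p1, v1, v2, gamma)
dU = 1.5 * n * R * (T2 - T1)
print(f"work done on the gas: {W:.2f} J, rise in internal energy: {dU:.2f} J")
```

The two numbers agree to within the integration error: the work put in by compression shows up as internal energy, and hence as a higher temperature.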

Microscopically, the gas molecules are normally reflected from the walls of the container with the same kinetic energy. During compression, however, the walls move “against” the molecules, and the molecules that hit them recoil with a greater velocity. If one calculates the average energy gain for the molecules, one gets the same temperature increase as that which follows from the macroscopic calculation.
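A one-dimensional sketch of a single bounce shows where the extra energy comes from. It assumes a perfectly elastic collision with a wall much more massive than the molecule: in the wall's own frame the molecule's velocity simply reverses, which in the lab frame gives $v' = 2u - v$. The molecular mass (roughly that of N₂), the speeds, and the helper name are illustrative assumptions.

```python
def reflect_off_moving_wall(v, u):
    """Elastic 1-D bounce off a massive wall (hypothetical helper, lab-frame velocities).

    v: molecule velocity, u: wall velocity. The velocity reverses in the wall's
    frame, so in the lab frame the outgoing velocity is 2u - v.
    """
    return 2 * u - v

m = 4.65e-26  # approximate mass of an N2 molecule, kg (assumed)
v = 500.0     # molecule moving in +x toward a wall on the right, m/s (assumed)
for u in (0.0, -10.0):  # stationary wall vs. wall moving left, into the gas
    v_out = reflect_off_moving_wall(v, u)
    gain = 0.5 * m * v_out ** 2 - 0.5 * m * v ** 2
    print(f"wall velocity {u:+.0f} m/s -> rebound speed {abs(v_out):.0f} m/s, "
          f"kinetic energy gain {gain:.2e} J")
```

A stationary wall returns the molecule with its original speed; a wall moving into the gas at speed $|u|$ sends it back faster by $2|u|$.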

________________________________________________

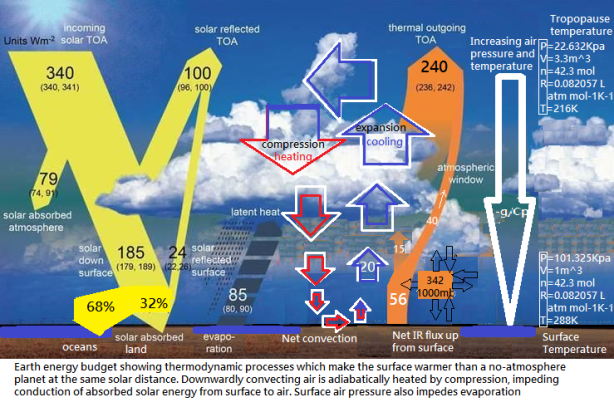

Taking Luboš’ answer and applying it to the Earth’s atmosphere, we can start to understand why Ned Nikolov and Karl Zeller have been able to find a function which predicts surface temperature on rocky planets and moons throughout the solar system using insolation and surface pressure as explained in their 2017 paper.

The Sun heats the Earth’s land and ocean surfaces. They lose heat into the lower atmosphere via several energy transfer mechanisms: roughly 2/3 via evaporation and conduction, 1/3 via radiation. The rate of transfer via evaporation and conduction depends on another process: convection.

Convection arises from the buoyancy of water vapour and warmer air, both of which are less dense than the cooler surrounding air. In turn, buoyancy is due to the pressure gradient in the atmosphere, and that gradient is due to gravity acting on the mass of the atmosphere. The surface air has the mass of the entire column above it bearing down on it under the force of gravity, so it is at the highest pressure, and pressure decreases with altitude.
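The idea that surface pressure is the weight of the whole column can be turned into a one-line calculation. This sketch assumes hydrostatic balance and the standard sea-level pressure and standard gravity values; it ignores the small variation of $g$ with height.

```python
g = 9.80665           # standard gravity, m/s^2
p_surface = 101325.0  # standard sea-level pressure, Pa

# Hydrostatic balance (assumed): surface pressure = weight of the air column
# per unit area, p = (m / A) * g, so the column mass per square metre is p / g.
column_mass = p_surface / g
print(f"mass of air above each square metre of surface: {column_mass:.0f} kg")
```

Roughly ten tonnes of air sit above every square metre at sea level, which is why the pressure is highest there.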

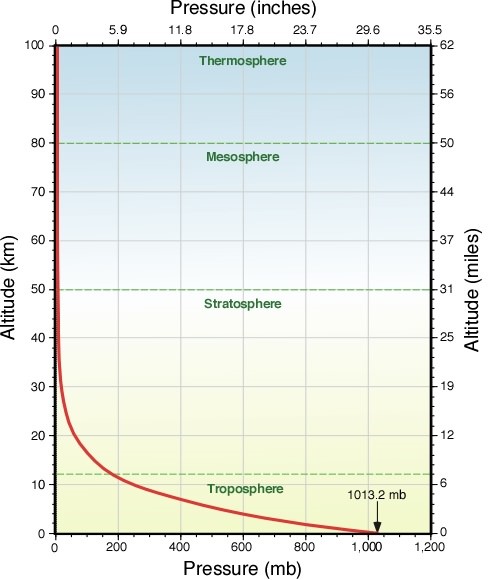

As you can see from the figure, pressure decreases exponentially with altitude, though the decline is fairly linear up to the tropopause, the boundary between the troposphere and stratosphere.
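The shape of the pressure curve can be sketched with two textbook models. The isothermal barometric formula $p = p_0 e^{-z/H}$ with scale height $H = RT/(Mg)$ gives a pure exponential, while the standard-atmosphere formula for a troposphere with a constant lapse rate reproduces the tabulated values more closely. The constants below are the commonly tabulated standard-atmosphere values and should be treated as assumptions; both function names are illustrative.

```python
import math

# Commonly tabulated standard-atmosphere constants (assumed)
P0 = 101325.0   # sea-level pressure, Pa
T0 = 288.15     # sea-level temperature, K
L = 0.0065      # tropospheric lapse rate, K/m
g = 9.80665     # standard gravity, m/s^2
M = 0.0289644   # molar mass of dry air, kg/mol
R = 8.3144598   # molar gas constant, J/(mol K)

def pressure_isothermal(z, T=T0):
    """Exponential barometric formula for an isothermal atmosphere, H = R T / (M g)."""
    H = R * T / (M * g)
    return P0 * math.exp(-z / H)

def pressure_troposphere(z):
    """Standard-atmosphere pressure for 0 <= z <= 11 km with constant lapse rate L."""
    return P0 * (1 - L * z / T0) ** (g * M / (R * L))

for z in range(0, 11001, 1000):
    print(f"{z / 1000:4.0f} km  isothermal {pressure_isothermal(z) / 100:7.1f} hPa"
          f"  standard atmosphere {pressure_troposphere(z) / 100:7.1f} hPa")
```

Both models fall steadily with height; the constant-lapse-rate model reaches about 226 hPa at 11 km, the usual tropopause value.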

Because the pressure drops with altitude, the warm, higher-pressure air convecting up from the surface expands into the lower-pressure regime at higher altitude and cools. The water vapour in the air condenses as the temperature drops to the dew point, releasing the latent heat of evaporation it acquired at the ocean surface as the latent heat of condensation. That heat is then efficiently radiated out to space from the cloud tops through the rarefied upper atmosphere.
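How fast rising air cools by expansion alone can be estimated from the dry adiabatic lapse rate, $\Gamma_d = g/c_p$. This sketch assumes unsaturated air with $c_p \approx 1004$ J/(kg K); once the vapour starts condensing, the released latent heat slows the cooling, which this simple version deliberately ignores. `parcel_temperature` is a hypothetical helper.

```python
g = 9.80665  # standard gravity, m/s^2
cp = 1004.0  # specific heat of dry air at constant pressure, J/(kg K) (approximate)

# An unsaturated parcel that rises and expands adiabatically cools at g / cp.
dry_lapse_rate = g / cp * 1000  # K per km
print(f"dry adiabatic lapse rate: about {dry_lapse_rate:.1f} K/km")

def parcel_temperature(T_surface, z_m):
    """Temperature (K) of an unsaturated parcel lifted dry-adiabatically to z_m metres.

    Ignores condensation, so it overstates cooling above the cloud base.
    """
    return T_surface - g / cp * z_m

print(f"parcel starting at 288.15 K, after rising 1 km: {parcel_temperature(288.15, 1000):.2f} K")
```
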

So far, so well known. A less commonly considered effect is that the rising warm air displaces cooler air from high altitude back down to the surface. On its way, it gets compressed by the higher pressure regime it is descending into. As Luboš explains, this causes the descending air to heat up. How big is this effect? The numbers on the right of the diagram at the head of the post tell us.

3.3 cubic metres of low-pressure cold air from the tropopause get compressed into 1 cubic metre of air back down at the surface. It warms from a chilly 216K to a pleasant 288K. But Luboš tells us that work must be done to perform the compression. So what supplies the energy to do the work? The answer is the Sun, which drives this cycle of upward and downward convection. At the boundary between the Hadley and Ferrel cells, where the effect is particularly strong, the hot dry air arriving back at the surface used to cause dehydration and heat exhaustion in animals being transported by ship across the ‘horse latitudes’. That’s how they got their name.
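The 3.3 : 1 volume ratio can be checked with the ideal gas law. For a fixed mass of air, $V \propto T/p$, so the ratio of volumes is $(T_\text{tropo}/p_\text{tropo}) / (T_\text{surface}/p_\text{surface})$. This sketch assumes the standard-atmosphere values for sea level and for the tropopause at 11 km.

```python
# Assumed standard-atmosphere values: sea level and tropopause (11 km)
p_surface, T_surface = 101325.0, 288.15  # Pa, K
p_tropo, T_tropo = 22632.0, 216.65       # Pa, K

# Ideal gas law: for a fixed mass of air, volume is proportional to T / p.
volume_ratio = (T_tropo / p_tropo) / (T_surface / p_surface)
print(f"air filling 1 m^3 at the surface would fill about {volume_ratio:.2f} m^3 at the tropopause")
```
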

Let’s imagine a world where this adiabatic cooling and heating didn’t exist, where the cold high-altitude air returning to the surface was still cold. That would create a big differential between the temperature of the surface air and that of the land and ocean surfaces. Where the temperature differential is large, heat is conducted rapidly, so the surface would cool quickly into the air. But because the descending air is adiabatically heated by compression, the differential is much smaller, and this means conduction occurs more slowly.

To be in equilibrium with the air, the surface has to lose heat as quickly as it gains it from the Sun. To do that, there has to be a sufficient difference between the temperature of the surface and that of the near-surface air for conduction to happen fast enough. If there isn’t a sufficient differential, the temperature of the surface will be forced to rise until there is. This is a large part of the reason why the surface temperature of Earth is much higher than that of our airless Moon, despite the Moon being at the same average distance from the Sun and not having a 30% cloud albedo reflecting sunlight back out to space before it reaches the surface.
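The equilibrium argument in the last two paragraphs can be illustrated with a deliberately simplified toy model. It assumes that all absorbed energy leaves the surface by conduction following Newton's law of cooling, $q = h\,(T_s - T_\text{air})$, and ignores radiation and evaporation. The absorbed flux `F` and the heat-transfer coefficient `h` are hypothetical round numbers, not measured values.

```python
def equilibrium_surface_temperature(T_air, absorbed_flux, h):
    """Surface temperature at which conduction removes heat as fast as it arrives.

    Toy model (assumption): q = h * (T_surface - T_air) is the only loss,
    so at equilibrium T_surface = T_air + absorbed_flux / h.
    """
    return T_air + absorbed_flux / h

F = 160.0  # hypothetical absorbed solar flux, W/m^2
h = 10.0   # hypothetical heat-transfer coefficient, W/(m^2 K)
for T_air in (216.0, 288.0):  # cold descending air vs. adiabatically warmed air
    Ts = equilibrium_surface_temperature(T_air, F, h)
    print(f"air at {T_air:.0f} K -> surface must reach {Ts:.0f} K to shed {F:.0f} W/m^2")
```

In this toy model the required temperature difference is fixed at `F / h`, so warmer near-surface air forces the surface temperature up by the same amount.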

Of course, as the surface temperature rises, it will also radiate more energy as well as conduct more energy and it’ll also evaporate more water. The consequences of those effects will be covered in following Thermodynamics 101 posts.

_________________________________________________

Disclaimer: No conservation of energy or other physical law was broken in the production of this post.

via Tallbloke’s Talkshop

July 16, 2018 at 04:30AM