Guest Post by Willis Eschenbach

Through what in my life is a typical series of misunderstandings and coincidences, I ended up looking at the average model results from the Coupled Model Intercomparison Project Phase 5 (CMIP5). I used the model-by-model averages from each of the four scenarios, a total of 38 results. The common period of these results is 1860 to 2100 or some such number. I used the results from 1860 to 2020, so I could see how the models were doing without looking at some imaginary future. The CMIP5 analysis was done a few years ago, so they had actual data for everything up to 2012. So the 153 years from 1860 to 2012 were a “hindcast” using actual forcing data, and the eight years from 2013 to 2020 were forecasts.

Figure 1. CMIP5 scenario averages by model, plus the overall average.

There were several things I found interesting about Figure 1. First was the large spread. Starting from a common baseline, by 2020 the model results ranged from 1°C of warming to 1.8°C of warming …

Given that horrible inter-model temperature spread in what is a hindcast up to 2012 plus eight years of forecasting, why would anyone trust the models for what will happen by the year 2100?

The other thing that interested me was the yellow line, which reminded me of my post entitled “Life Is Like A Black Box Of Chocolates”. In that post I discussed the idea of a “black box” analysis. The basic concept is that you have a black box with inputs and outputs, and your job is to figure out some procedure, simple or complex, to transform the input into the output. In the present case, the “black box” is a climate model, the inputs are the yearly “radiative forcings” from aerosols and CO2 and volcanoes and the like, and the outputs are the yearly global average temperature values.

That same post also shows that the model outputs can be emulated to an extremely high degree of fidelity by simply lagging and rescaling the inputs. Here’s an example of how well that works, from that post.

Figure 2. Original Caption: “CCSM3 model functional equivalent equation, compared to actual CCSM3 output. The two are almost identical.”

So I got a set of the CMIP5 forcings and used them to emulate the average of the CMIP5 models (links to models and forcings in the Technical Notes at the end). Figure 3 shows that result.

Figure 3. Average of CMIP5 files as in Figure 1, along with black box emulation.

Once again it is a very close match. Having seen that, I wanted to look at some individual results. Here is the first set.

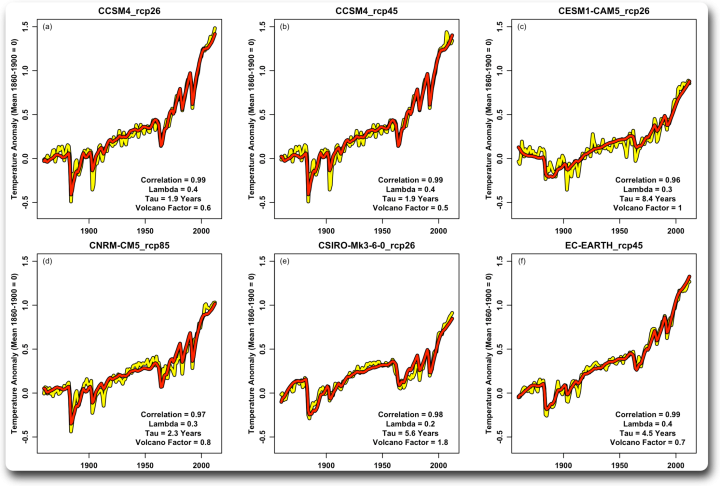

Figure 4. Six scenario averages from different models.

An interesting aspect of this is the variation in the volcano factor. The models seem to handle the forcing from short-term events like volcanoes differently than the gradual increase in overall forcing. And the individual models differ from each other, with the volcano factor in this group ranging from 0.5 (half the volcanic forcing applied) to 1.8 (eighty percent extra volcanic forcing applied). The correlations are all quite high, ranging from 0.96 to 0.99. Here’s a second group.

Figure 5. Six more scenario averages from different models.

Panel (a) at the top left is interesting, in that it’s obvious that the volcanoes weren’t included in the forcing for that model. As a result, the volcanic forcing factor is zero … and the correlation is still 0.98.
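For readers who want to see what a volcano factor does to the input, here is a minimal Python sketch. It assumes the factor simply multiplies the volcanic part of the forcing before everything is added together. The function name `total_forcing` and the numbers are made up for illustration and are not CMIP5 data.

```python
def total_forcing(non_volcanic, volcanic, volcanic_factor):
    """Combine yearly forcings (W/m2), rescaling only the volcanic part.

    Assumption: the volcano factor is a plain multiplier on the volcanic
    forcing series. A factor of 0 drops the volcanoes entirely.
    """
    return [nv + volcanic_factor * v for nv, v in zip(non_volcanic, volcanic)]


# Made-up illustrative values, not CMIP5 data.
non_volcanic = [0.10, 0.12, 0.14, 0.16]
volcanic = [0.0, -2.0, -0.5, 0.0]  # an eruption in the second year

half = total_forcing(non_volcanic, volcanic, 0.5)   # half the volcanic forcing
extra = total_forcing(non_volcanic, volcanic, 1.8)  # eighty percent extra
none = total_forcing(non_volcanic, volcanic, 0.0)   # volcanoes not included
```

With a factor of zero the result is just the non-volcanic series, which is the situation described for panel (a).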

What this shows is that despite their incredible complexity and their thousands and thousands of lines of code and their 20,000 2-D gridcells times 60 layers equals 1.2 million 3-D gridcells … their output can be emulated in one single line of code, viz:

T(n+1) = T(n) + λ ∆F(n+1) / τ + ∆T(n) exp( -1 / τ )

OK, now let’s unpack this equation in English. It looks complex, but it’s not.

T(n) is pronounced “T sub n”. It is the temperature “T” at time “n”. So T sub n plus one, written as T(n+1), is the temperature during the following time period. In this case we’re using years, so it would be the next year’s temperature.

F is the radiative forcing from changes in volcanoes, aerosols, CO2, and other factors, measured in watts per square metre (W/m2). This is the total of all of the forcings under consideration. The same time convention is followed, so F(n) means the forcing “F” in time period “n”.

Delta, or “∆”, means “the change in”. So ∆T(n) is the change in temperature since the previous period, or T(n) minus the previous temperature T(n-1). Correspondingly, ∆F(n) is the change in forcing since the previous time period.

Lambda, or “λ”, is the scale factor. Tau, or “τ”, is the lag time constant. The time constant establishes the amount of the lag in the response of the system to forcing. And finally, “exp (x)” means the number 2.71828 to the power of x.

So in English, this means that the temperature next year, or T(n+1), is equal to the temperature this year, T(n), plus the immediate temperature increase due to the change in forcing, λ ∆F(n+1) / τ, plus the lag term, ∆T(n) exp( -1 / τ ), carried over from the previous changes in forcing. This lag term is necessary because the effects of the changes in forcing are not instantaneous.
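The same equation can be written as a few lines of Python. This is a sketch under my own assumptions: the function name `emulate`, the starting temperature `t0`, and the choice to treat the temperature change before the first year as zero are not from the spreadsheet, and the example forcing values are made up.

```python
import math


def emulate(forcing, lam, tau, t0=0.0):
    """Apply T(n+1) = T(n) + lam*dF(n+1)/tau + dT(n)*exp(-1/tau) year by year.

    forcing: yearly total forcing in W/m2
    lam:     scale factor (lambda)
    tau:     lag time constant in years
    """
    temps = [t0]
    d_temp = 0.0  # dT(n); assumed to be zero before the first step
    decay = math.exp(-1.0 / tau)
    for n in range(len(forcing) - 1):
        d_forcing = forcing[n + 1] - forcing[n]          # dF(n+1)
        d_temp = lam * d_forcing / tau + d_temp * decay  # dT(n+1)
        temps.append(temps[-1] + d_temp)                 # T(n+1)
    return temps


# Made-up forcing: a 1 W/m2 jump after the first year, then flat.
example = emulate([0.0, 1.0, 1.0, 1.0], lam=0.5, tau=3.0)
flat = emulate([2.0, 2.0, 2.0], lam=0.5, tau=3.0)
```

In the example, the first year after the jump warms by lam / tau, and each later year adds the previous year's change multiplied by exp(-1 / tau). A flat forcing produces no temperature change at all.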

Curious, no? Millions of gridcells, hundreds of thousands of lines of code, a supercomputer to crunch them … and it turns out that their output is nothing but a lagged (tau) and rescaled (lambda) version of their input.

Having seen that, I thought I’d use the same procedure on the actual temperature record. I’ve used the Berkeley Earth global average surface air temperature record, although the results are very similar using other temperature datasets. Figure 6 shows that result.

Figure 6. The Berkeley Earth temperature record, and the emulation using the same forcing as in the previous figures. I’ve included Figure 3 on the right for comparison.

It turns out that the model average is much more sensitive to the volcanic forcing, and has a shorter time constant tau. And of course, since the earth is a single example and not an average, it contains much more variation and thus a slightly lower correlation with the emulation (0.94 vs 0.99).
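The correlations quoted here can be checked with a few lines of Python. This sketch assumes the ordinary Pearson correlation between the emulation and the target series. The function name `pearson` and the numbers are illustrative only.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up series: an "emulation" and a noisier "observed" record.
emulated = [0.0, 0.1, 0.2, 0.3, 0.4]
observed = [0.05, 0.05, 0.25, 0.25, 0.45]
r = pearson(emulated, observed)
```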

So does this show that forcings actually rule the temperature? Well … no, for a simple reason. The forcings have been chosen and refined over the years to give a good fit to the temperature … so the fact that it fits has no probative value at all.

One final thing we can do. IF the temperature is actually a result of the forcings, then we can use the factors above to estimate what the long-term effect of a sudden doubling of CO2 will be. The IPCC says that this will increase the forcing by 3.7 watts per square meter (W/m2). We simply use a step function for the forcing with a jump of 3.7 W/m2 at a given date. Here’s that result, with a jump of 3.7 W/m2 in the model year 1900.

Figure 7. Long-term change in temperature from a doubling of CO2, using 3.7 W/m2 as the increase in forcing and calculated with the lambda and tau values for the Berkeley Earth and CMIP5 Model Average as shown in Figure 6.
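The step-function calculation is easy to reproduce. In the sketch below the lambda and tau values are placeholders I picked for illustration, not the fitted values shown in Figure 6. Because each year's temperature change is the previous year's change times exp(-1/τ), the changes after a single jump form a geometric series, and the long-term total works out to λ ∆F / (τ (1 - exp(-1/τ))). The code runs the equation forward and compares it with that closed form.

```python
import math


def step_response(lam, tau, jump=3.7, years=200):
    """Temperature path after a one-time forcing jump (W/m2) in year 1."""
    decay = math.exp(-1.0 / tau)
    temp, d_temp = 0.0, 0.0
    temps = [temp]
    for year in range(1, years + 1):
        d_forcing = jump if year == 1 else 0.0
        d_temp = lam * d_forcing / tau + d_temp * decay
        temp += d_temp
        temps.append(temp)
    return temps


def equilibrium(lam, tau, jump=3.7):
    """Closed-form limit of the step response (sum of the geometric series)."""
    return lam * jump / (tau * (1.0 - math.exp(-1.0 / tau)))


# Placeholder parameters, NOT the fitted values from Figure 6.
short_lag = step_response(lam=0.5, tau=3.0)
long_lag = step_response(lam=0.5, tau=8.0)

# Fraction of the final warming reached ten years after the jump.
frac_short = short_lag[10] / equilibrium(0.5, 3.0)
frac_long = long_lag[10] / equilibrium(0.5, 8.0)
```

With these placeholder numbers, the longer time constant has covered a smaller share of its final warming after ten years, which is the slower approach to equilibrium visible in the figure.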

Note that with its larger time constant tau, the real earth (blue line) takes longer to reach equilibrium, on the order of 40 years, than the emulation using the CMIP5 model average values. And because the real earth has a larger scale factor lambda, the end result is slightly larger.

So … is this the mysterious Equilibrium Climate Sensitivity (ECS) we read so much about? Depends. IF the forcing values are accurate and IF forcing roolz temperature … maybe they’re in the ballpark.

Or not. The climate is hugely complex. What I modestly call “Willis’s First Law Of Climate” says:

Everything in the climate is connected with everything else … which in turn is connected with everything else … except when it’s not.

And now, me, I spent the day pressure-washing the deck on the guest house, and my lower back is saying “LIE DOWN, FOOL!” … so I’ll leave you with my best wishes for a wonderful life in this endless universe of mysteries.

w.

My Usual: When you comment please quote the exact words you are discussing. This avoids many of the misunderstandings which are the bane of the intarwebs …

Technical Notes:

I’ve put all of the modeled temperatures and forcing data and a working example of how to do the fitting as an Excel xlsx workbook in my Dropbox here.
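For those who would rather not open a spreadsheet, here is a rough Python sketch of the same kind of fitting. It is not a copy of the workbook: it uses a plain grid search over lambda and tau to minimize the sum of squared errors, it leaves out the volcano factor, and it runs on a synthetic forcing series I made up so that the true answer is known. All the names (`emulate`, `fit`, and so on) are my own for this example.

```python
import math


def emulate(forcing, lam, tau):
    """Lagged, rescaled forcing: T(n+1) = T(n) + lam*dF/tau + dT*exp(-1/tau)."""
    decay = math.exp(-1.0 / tau)
    temps, d_temp = [0.0], 0.0
    for n in range(len(forcing) - 1):
        d_temp = lam * (forcing[n + 1] - forcing[n]) / tau + d_temp * decay
        temps.append(temps[-1] + d_temp)
    return temps


def sum_sq_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit(forcing, target):
    """Grid search for the (lam, tau) pair with the smallest squared error."""
    best = None
    for i in range(2, 41):        # lam from 0.10 to 2.00 in steps of 0.05
        lam = i * 0.05
        for j in range(2, 41):    # tau from 1.0 to 20.0 in steps of 0.5
            tau = j * 0.5
            err = sum_sq_error(emulate(forcing, lam, tau), target)
            if best is None or err < best[0]:
                best = (err, lam, tau)
    return best


# Synthetic forcing: a slow ramp with two made-up "eruption" years.
forcing = [0.02 * year for year in range(100)]
for year in (30, 70):
    forcing[year] -= 2.0

# Synthetic "model output" built with known parameters, then refitted.
target = emulate(forcing, 0.5, 4.0)
err, best_lam, best_tau = fit(forcing, target)
```

A grid search is crude but has no dependencies. A proper optimizer would do the same job faster and with finer resolution.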

Forcings Source: Miller et al.

Model Results Source: KNMI

Model Scenario Averages Used: (Not all model teams provided averages by scenario)

CanESM2_rcp26

CanESM2_rcp45

CanESM2_rcp85

CCSM4_rcp26

CCSM4_rcp45

CCSM4_rcp60

CCSM4_rcp85

CESM1-CAM5_rcp26

CESM1-CAM5_rcp45

CESM1-CAM5_rcp60

CESM1-CAM5_rcp85

CNRM-CM5_rcp85

CSIRO-Mk3-6-0_rcp26

CSIRO-Mk3-6-0_rcp45

CSIRO-Mk3-6-0_rcp60

CSIRO-Mk3-6-0_rcp85

EC-EARTH_rcp26

EC-EARTH_rcp45

EC-EARTH_rcp85

FIO-ESM_rcp26

FIO-ESM_rcp45

FIO-ESM_rcp60

FIO-ESM_rcp85

HadGEM2-ES_rcp26

HadGEM2-ES_rcp45

HadGEM2-ES_rcp60

HadGEM2-ES_rcp85

IPSL-CM5A-LR_rcp26

IPSL-CM5A-LR_rcp45

IPSL-CM5A-LR_rcp85

MIROC5_rcp26

MIROC5_rcp45

MIROC5_rcp60

MIROC5_rcp85

MPI-ESM-LR_rcp26

MPI-ESM-LR_rcp45

MPI-ESM-LR_rcp85

MPI-ESM-MR_rcp45

via Watts Up With That?

February 3, 2022 at 12:43PM